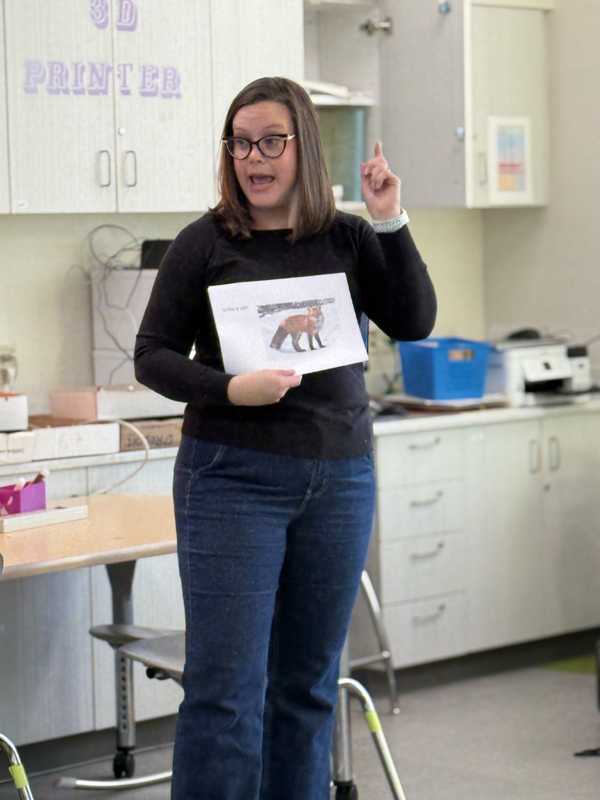

Chagrin Falls fifth graders recently spent time learning about a topic many adults are still trying to understand: artificial intelligence (AI). In lessons taught by Technology Coach Molly Klodor, students learned that artificial intelligence is a type of technology that learns from data and finds patterns to solve problems. It does not think like a human. It does not “know” things in the way people do. It makes “guesses” based on what it has been taught.

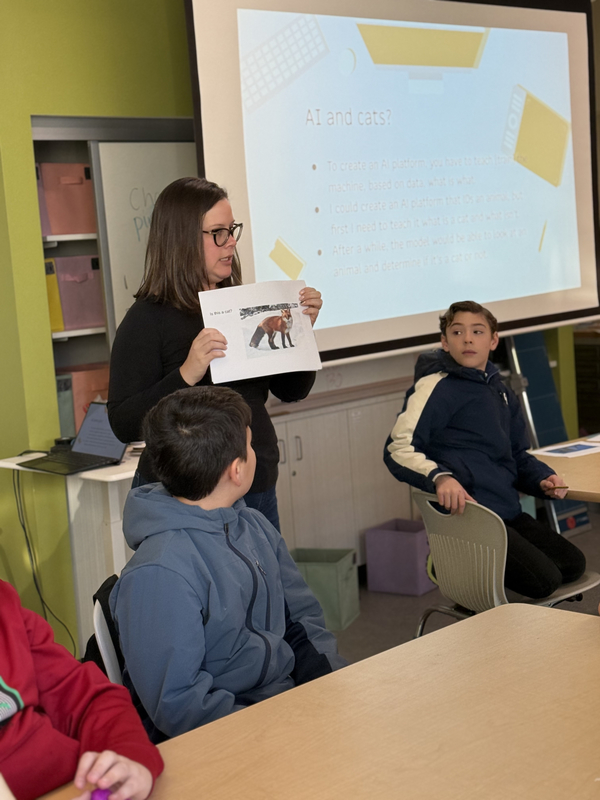

To make the idea clear, Mrs. Klodor used a simple example: cats. Students first identified various animal photos as cats or non-cats. Using Teachable Machine, a school-safe tool for creating machine learning models, students then trained the AI by uploading images and labeling each one as a cat or non-cat. They then tested the AI’s understanding of what a cat is using other photos and even their own faces. Some were surprisingly cat-like! Students experienced firsthand how a machine learning system can be trained to identify whether a picture shows a cat, and that the more data available to the system, the better the AI will do in making a correct guess.

“That example really clicks for students,” Klodor said. “They identify pretty quickly that if you don’t train the AI well, it will get things wrong. And that leads to good conversations about the limitations of AI.”

Students also learned that not all AI is the same. Some tools, like robot vacuums or voice assistants, respond to commands or follow patterns. Others, known as generative AI, create something new, including images, songs and text. Students were asked to identify whether a song was created by a human or by AI and whether a photo was real or AI-generated. While the song proved harder to identify, students observed numerous details in the photos that pointed to AI-generated images.

When exploring AI in a classroom setting, a key element of each lesson is recognizing that AI may produce an answer that sounds confident but is incorrect. This is sometimes called a “hallucination,” a term students learn and discuss in age-appropriate ways.

“We want students to know that AI can sound very sure of itself even when it’s wrong,” Klodor explained. “That’s why it’s so important for kids to question it, check other sources, and think for themselves.”

The district’s approach to AI education is built on the reality that students are already aware of these tools; ignoring them fails to prepare students for their future. Instead, we provide guided, developmentally appropriate experiences that allow students to see firsthand how bias, missing information, or poor examples can lead to inaccurate results. These experiences help build critical thinking skills that will matter far beyond the classroom.

“Our goal isn’t to outsource our learning to AI,” Klodor said. “It’s teaching them to be thoughtful, ethical users who understand AI’s limits.”